Who Should Care About API Performance Testing?

- Developers: To improve API response times and efficiency.

- Testers/QA Engineers: To detect and fix performance problems before deployment.

- DevOps Teams: To integrate automated performance tests into CI/CD pipelines.

- Business Owners/Product Managers: To ensure a smooth user experience and prevent downtime.

What is API Performance Testing?

Key Objectives of API Performance Testing

- Ensure Fast Response Times – The API must respond quickly so that users have a smooth interaction.

- Maintain Stability Under Load – The API should not degrade or stop responding when it receives many simultaneous requests.

- Identify Bottlenecks & Optimize Performance – Find slow database queries, high memory usage, or inefficient algorithms.

- Validate Scalability – Check whether the API can handle a growing number of users as demand increases.

- Optimize Resource Utilization – Monitor CPU, memory, and network behavior to avoid waste.

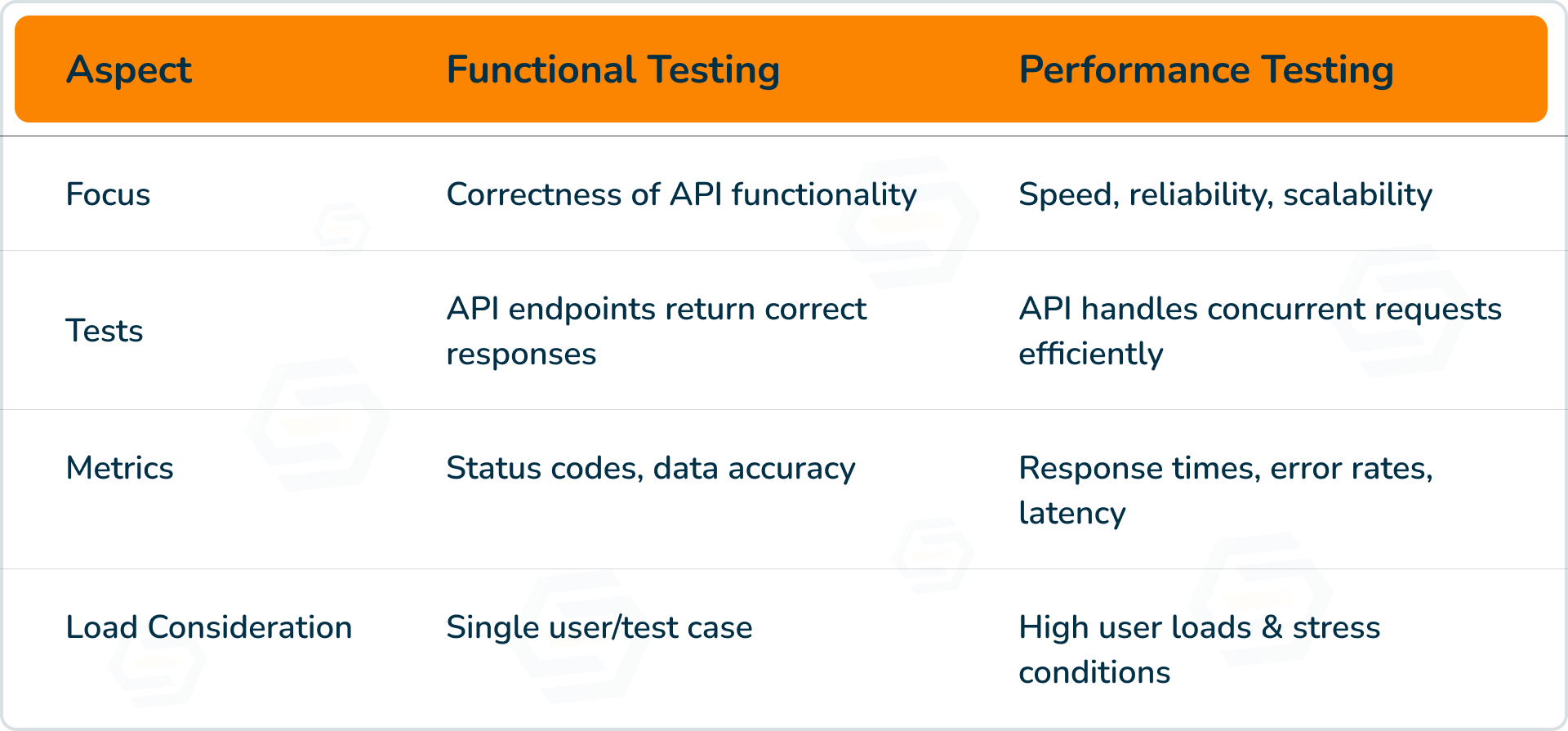

How API Performance Testing Differs from Functional Testing

API Performance Testing Process

Step 1: Define Performance Objectives

Key Considerations:

- Response Time: Determine the acceptable range (e.g., the API must respond within 200 ms under normal load).

- Throughput: Define the number of API requests the system should handle per second (e.g., 1,000 RPS).

- Scalability Requirements: Define how much additional traffic the API must handle while continuing to function properly.

- Error Rate: Specify an acceptable error rate (e.g., no more than a 0.5% failure rate under peak load).

- Resource Utilization: Set limits on CPU, memory, and network consumption.
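
The considerations above can be written down as explicit, machine-checkable thresholds. The following is a minimal Python sketch, not a prescribed format: the names `PerformanceObjectives` and `violations` and the field names are assumptions made for illustration. The default values reuse the example figures from the list, except the CPU limit, which is a placeholder.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceObjectives:
    """Hypothetical container for the targets listed above."""
    max_response_ms: float = 200.0      # example from the text: 200 ms under normal load
    min_throughput_rps: float = 1000.0  # example from the text: 1,000 RPS
    max_error_rate: float = 0.005       # example from the text: 0.5% under peak load
    max_cpu_percent: float = 80.0       # placeholder limit, not taken from the text


def violations(objectives, measured):
    """Return a list of objectives that the measured values violate.

    `measured` is a dict with the keys response_ms, throughput_rps,
    error_rate and cpu_percent (key names assumed for this sketch).
    """
    problems = []
    if measured["response_ms"] > objectives.max_response_ms:
        problems.append("response time too high")
    if measured["throughput_rps"] < objectives.min_throughput_rps:
        problems.append("throughput too low")
    if measured["error_rate"] > objectives.max_error_rate:
        problems.append("error rate too high")
    if measured["cpu_percent"] > objectives.max_cpu_percent:
        problems.append("CPU usage too high")
    return problems
```

An empty list means every objective was met, which makes the result easy to use as a pass/fail signal later in the process.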

Step 2: Choose the Right API Performance Testing Tool

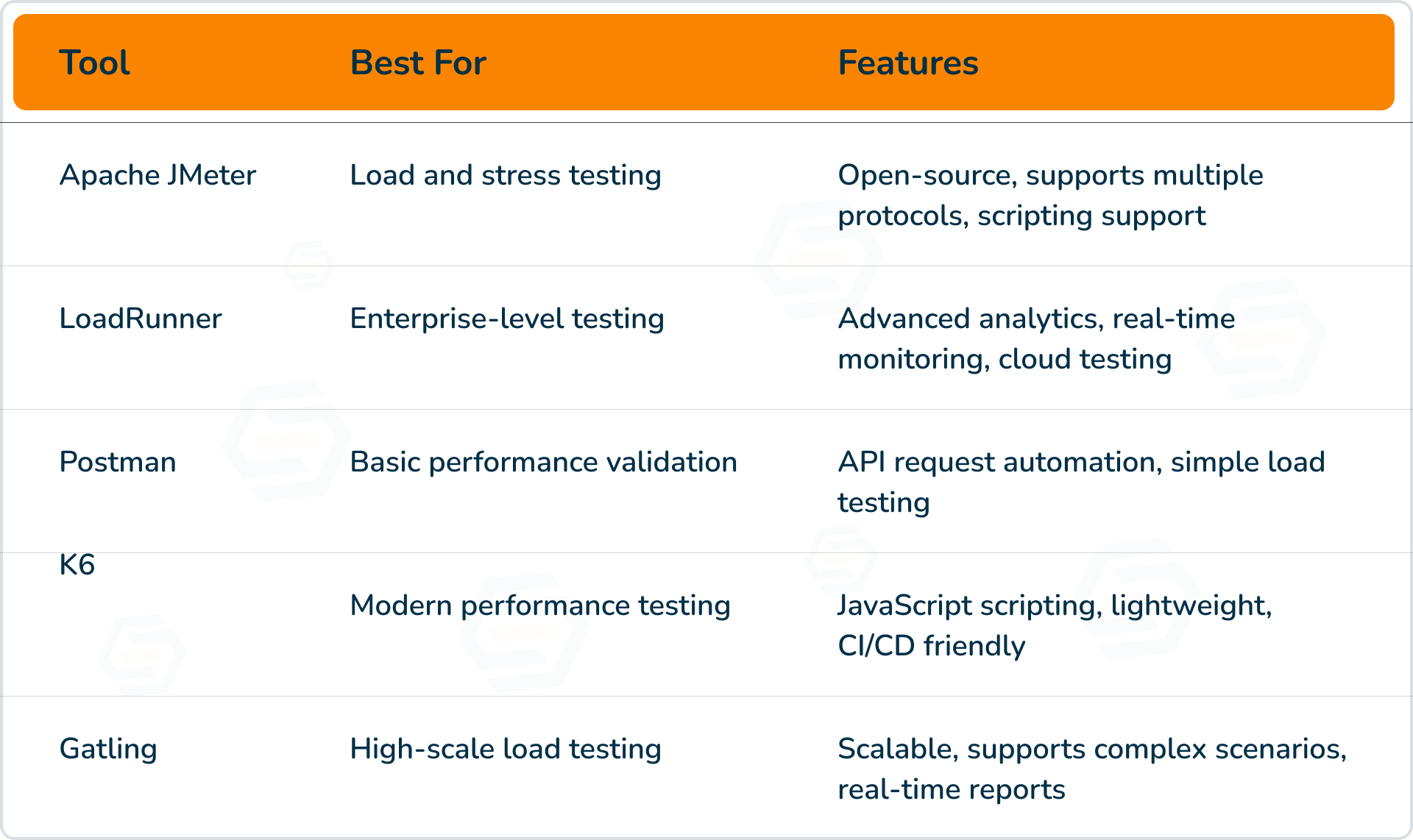

Popular API Performance Testing Tools

How to Choose the Right Tool

- For basic API performance checks, Postman is a good choice.

- For high-load simulations, use Apache JMeter or Gatling.

- For integration into CI/CD pipelines, K6 or LoadRunner are well suited.

Step 3: Set Up a Real-World Test Environment

Key Steps

1. Replicate Production Infrastructure

- Use a similar server configuration (CPU, RAM, network).

- Ensure the same database settings.

- Match the API gateway and CDN settings.

2. Simulate Realistic Network Conditions

- Run the tests under different network conditions (e.g., 4G, 5G, fiber).

- Account for latency that varies with the user’s location.

3. Use Meaningful Test Data

- Write multiple realistic user queries that reflect actual usage.

- Include both valid and invalid requests to test API error handling.

Step 4: Design Test Scenarios

Common Test Scenarios

1. Normal Load Testing

- Simulates predicted daily traffic.

- Ensures the API handles typical user activity as expected.

2. Peak Load Testing

- Tests how well the API performs during peak usage hours.

- Example: Black Friday sales for an e-commerce API.

3. Stress Testing

- Pushes the API beyond its limits to determine the breaking point.

- Helps identify whether the API crashes when too many users are active.

4. Spike Testing

- Assesses how the API handles sudden surges in traffic.

- Example: A social media API hit by a traffic surge caused by viral content.

5. Endurance (Soak) Testing

- Keeps the API under a steady load for an extended period.

- Reveals issues such as memory leaks, slowdowns, and resource depletion.
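
The five scenarios above differ mainly in the shape of the load over time. As a rough illustration, the sketch below describes each scenario as a list of `(second, target_users)` points and interpolates between them. The function names `load_profile` and `users_at` and all multipliers (3x for peak, 10x for a spike, and so on) are assumptions chosen for the example, not recommendations.

```python
def load_profile(kind, base_users=100, duration_s=600):
    """Return (second, target_users) points for one scenario.

    All shapes and multipliers here are illustrative assumptions.
    """
    b, d = base_users, duration_s
    profiles = {
        # Flat line at the expected daily level.
        "normal": [(0, b), (d, b)],
        # Ramp up to a busy period, hold it, then ramp down.
        "peak": [(0, b), (d // 4, 3 * b), (3 * d // 4, 3 * b), (d, b)],
        # Keep climbing to look for the breaking point.
        "stress": [(0, b), (d // 3, 2 * b), (2 * d // 3, 4 * b), (d, 8 * b)],
        # Jump to 10x within 10 seconds, then fall back.
        "spike": [(0, b), (d // 2, b), (d // 2 + 10, 10 * b),
                  (d // 2 + 20, b), (d, b)],
        # Steady load for a long time (48 hours in this sketch).
        "soak": [(0, b), (48 * 3600, b)],
    }
    return profiles[kind]


def users_at(profile, t):
    """Linearly interpolate the target user count at time t (seconds)."""
    if t <= profile[0][0]:
        return profile[0][1]
    for (t0, u0), (t1, u1) in zip(profile, profile[1:]):
        if t0 <= t <= t1:
            if t1 == t0:
                return u1
            return round(u0 + (u1 - u0) * (t - t0) / (t1 - t0))
    return profile[-1][1]  # after the last point, hold the final level
```

A load generator could call `users_at` once per second to decide how many virtual users to keep active.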

Step 5: Execute Tests and Monitor Performance

Execution Process

1. Start with Baseline Tests

- Run the API under normal load conditions.

- Measure initial response times and error rates.

2. Gradually Increase Load

- Increase traffic in stages, such as 500, 1,000, and 5,000 concurrent users.

- Monitor latency, error rates, and system resource usage.

3. Apply Stress & Spike Tests

- Inject unexpected traffic to test the resilience of the API.

- Check whether the API slows down or crashes.

4. Capture Real-Time Metrics

- Use tools such as New Relic, Datadog, or AWS CloudWatch to monitor API performance.

- Track response times, errors, database queries, and CPU and memory usage in real time.
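
To make the staged execution concrete, here is a small Python sketch that runs a request function at increasing concurrency levels and records successes, errors, and the slowest call per stage. It is an illustration only: `run_stage`, `run_stepped_load`, and the `send_request` callable are hypothetical names, and the default stage sizes are deliberately tiny. Reaching thousands of concurrent users in practice generally calls for a dedicated load-testing tool rather than one thread per user.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_stage(send_request, concurrent_users, requests_per_user=5):
    """Run one load stage; return (latencies_in_seconds, error_count)."""

    def user_session(_user_id):
        latencies, errors = [], 0
        for _ in range(requests_per_user):
            start = time.perf_counter()
            try:
                send_request()  # any callable that raises on failure
            except Exception:
                errors += 1
            else:
                latencies.append(time.perf_counter() - start)
        return latencies, errors

    # One worker thread per simulated user.
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(user_session, range(concurrent_users)))

    all_latencies = [lat for lats, _ in results for lat in lats]
    total_errors = sum(err for _, err in results)
    return all_latencies, total_errors


def run_stepped_load(send_request, stages=(5, 10, 20)):
    """Increase the load stage by stage and summarize each stage."""
    report = {}
    for users in stages:
        latencies, errors = run_stage(send_request, users)
        report[users] = {
            "ok": len(latencies),
            "errors": errors,
            "slowest_s": max(latencies, default=0.0),
        }
    return report
```

Passing the request as a callable keeps the sketch independent of any HTTP client, so the same loop can wrap whichever client the project already uses.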

Key Metrics to Monitor

- Response Time (average, peak, and percentile-based)

- Throughput (requests per second)

- Error Rate (failed vs. successful requests)

- Resource Utilization (CPU, memory, network bandwidth)
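
The request-level metrics in this list can be derived from raw test samples. The sketch below computes them with the Python standard library; `percentile` and `summarize` are illustrative names, and the percentile uses the simple nearest-rank definition, which is one of several common conventions.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms, failed, duration_s):
    """Summarize one test run.

    latencies_ms: response times of the successful requests
    failed:       number of failed requests
    duration_s:   wall-clock length of the run in seconds
    """
    total = len(latencies_ms) + failed
    return {
        "avg_ms": statistics.mean(latencies_ms),
        "peak_ms": max(latencies_ms),
        "p95_ms": percentile(latencies_ms, 95),
        "throughput_rps": total / duration_s,
        "error_rate": failed / total,
    }
```

Percentiles are usually more informative than the average, because a few very slow requests can hide behind a healthy-looking mean.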

Step 6: Analyze Results and Identify Bottlenecks

How to Identify Bottlenecks

- High Response Times: These can result from slow backend processing or overloaded servers.

- Increased Error Rates: These can occur when API requests time out or the API crashes.

- CPU/Memory Spikes: These can result from inefficient API logic or resource exhaustion.

- Database Bottlenecks: Slow queries and missing indexes can increase response times.

Step 7: Optimize API Performance Based on Insights

Common Optimization Strategies

1. Database Optimization

- Add indexes for frequently run queries to speed up database operations.

- Introduce caching systems (Redis, Memcached) to reduce the load on the database.

2. Code & Architecture Improvements

- Optimize API endpoints by removing unnecessary computations.

- Switch to asynchronous processing for background tasks.

- Use pagination for large dataset responses.

3. Infrastructure Scaling

- Use auto-scaling features such as AWS Auto Scaling or Kubernetes.

- Use load balancers to distribute traffic across multiple API instances.

4. Throttling & Rate Limiting

- Protect APIs against DDoS attacks and traffic bursts.

- Restrict the number of requests per user to avoid server overload.

5. Re-Test After Optimization

- Repeat the tests after the changes are made.

- Compare the results to confirm the performance improvements.
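
Comparing results before and after an optimization is easier with a fixed rule for what counts as a real change. The following sketch labels each metric as improved, regressed, or unchanged. The name `compare_runs` and the 5% noise tolerance are assumptions for illustration, and the function only covers metrics where lower is better, such as latency and error rate.

```python
def compare_runs(before, after, tolerance=0.05):
    """Classify each metric as 'improved', 'regressed' or 'unchanged'.

    `before` and `after` map metric names to values where LOWER is better.
    Relative changes within `tolerance` are treated as noise; the 5%
    default is an arbitrary choice for this sketch.
    """
    verdicts = {}
    for name, old in before.items():
        new = after[name]
        if old:
            change = (new - old) / old
        else:
            # Baseline of zero: any increase counts as a regression.
            change = float("inf") if new > 0 else 0.0
        if change < -tolerance:
            verdicts[name] = "improved"
        elif change > tolerance:
            verdicts[name] = "regressed"
        else:
            verdicts[name] = "unchanged"
    return verdicts
```

For example, a p95 latency that drops from 400 ms to 250 ms is reported as improved, while an error rate that moves from 1.00% to 1.02% stays within the tolerance and is reported as unchanged.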

Tools for API Performance Testing

- Postman – Used mainly for functional testing, but it also supports basic performance tests by running a set of API requests as a collection. It is best suited to small-scale tests.

- Apache JMeter – An open-source tool for load and stress testing APIs. It supports large-scale performance simulations and real-time monitoring.

- LoadRunner – An enterprise-class performance testing tool with interactive dashboards, advanced analytics, distributed load generation, and integration with cloud environments.

- SoapUI – Designed specifically for SOAP and REST API testing, allowing testers to simulate high loads, analyze responses, and automate test execution.

- K6 & Gatling – Used for performance engineering thanks to reusable, lightweight scripting, CI/CD integration, and real-time performance monitoring. They are a good fit for DevOps workflows.

Key Benefits of API Performance Testing

1. Prevents Downtime & Service Failures

- Ensures that APIs can handle heavy user loads without breaking.

- Identifies potential bottlenecks that would cause failures in production.

2. Optimizes User Experience

- Reduces latency and improves response times.

- Ensures users don’t experience slow page loads or app crashes.

3. Improves API Scalability

- Helps businesses handle traffic surges from promotions, seasonal peaks, or viral events.

- Ensures the API maintains its performance level as user demand increases.

4. Enhances System Reliability

- API breakdowns can result in data loss, broken transactions, or even system outages.

- Performance testing mitigates risks associated with infrastructure changes.

5. Reduces Infrastructure Costs

- Identifies unnecessary consumption of CPU, memory, and bandwidth.

- Determines the most efficient way to run the API (cloud or server) so that costs stay affordable.

Types of API Performance Testing

1. Load Testing

- Purpose: Shows how the API performs up to the maximum expected traffic level.

- Scenario: Simulates real-world traffic and checks uptime and response times to ensure the system runs smoothly under load.

- Example: Checking the API’s performance while 1,000 users use the application during normal business hours.

- Why it matters: Confirms whether the API can handle increased load during peak times without slowing down.

2. Stress Testing

- Purpose: Tests the API’s capacity by pushing it past normal load conditions until it malfunctions or breaks.

- Scenario: Simulates sudden traffic surges to find the point at which the API goes down or fails.

- Example: Testing how well an API can handle 500,000 login requests in a minute.

- Why it matters: Helps prevent API failures and shows how well the API recovers after one.

3. Endurance (Soak) Testing

- Purpose: Determines whether the API remains stable over a long period under a steady load.

- Scenario: Running a continuous API test to identify memory leaks or performance degradation.

- Example: Running an API test for 48 hours straight to check whether response times slow down over time.

- Why it matters: Identifies memory leaks and gradual performance degradation that only appear after long periods of execution.

4. Spike Testing

- Purpose: Tests the API’s response to a sudden increase in the number of users, for instance during marketing campaigns or flash sales.

- Scenario: Simulating a short-term, rapid surge in the number of users accessing the API.

- Example: Checking API performance when traffic jumps from 500 to 50,000 in 10 seconds.

- Why it matters: Ensures the API can handle unexpected demand without failing.

5. Volume Testing

- Purpose: Assesses API performance when handling large quantities of data.

- Scenario: Simulating bulk database inserts or large file uploads.

- Example: Testing how an API processes 100 million records at once.

- Why it matters: Confirms that the API remains efficient when processing large volumes of data.

Why is API Performance Testing Important?

Key Metrics for Measuring API Performance

- Response Time – Measures how quickly the API responds to requests, including average, peak, and 95th percentile times. Lower response times enhance user experience.

- Latency – The delay between a client request and API response, influenced by network conditions and server processing speed. Lower latency leads to faster interactions.

- Throughput – The number of requests the API can handle per second (RPS), indicating its ability to sustain high loads. Higher throughput ensures better scalability.

- Error Rate – The percentage of failed API requests, which may signal crashes, authentication failures, or system overloads. Reducing errors improves reliability.

- Resource Utilization – Tracks CPU, memory, and network usage. High consumption may indicate inefficient code or poor server configuration, impacting scalability and cost.

- Concurrency & Scalability – Measures how well the API handles multiple simultaneous requests. A scalable API efficiently adapts to growing user demands.

API Monitoring Strategies

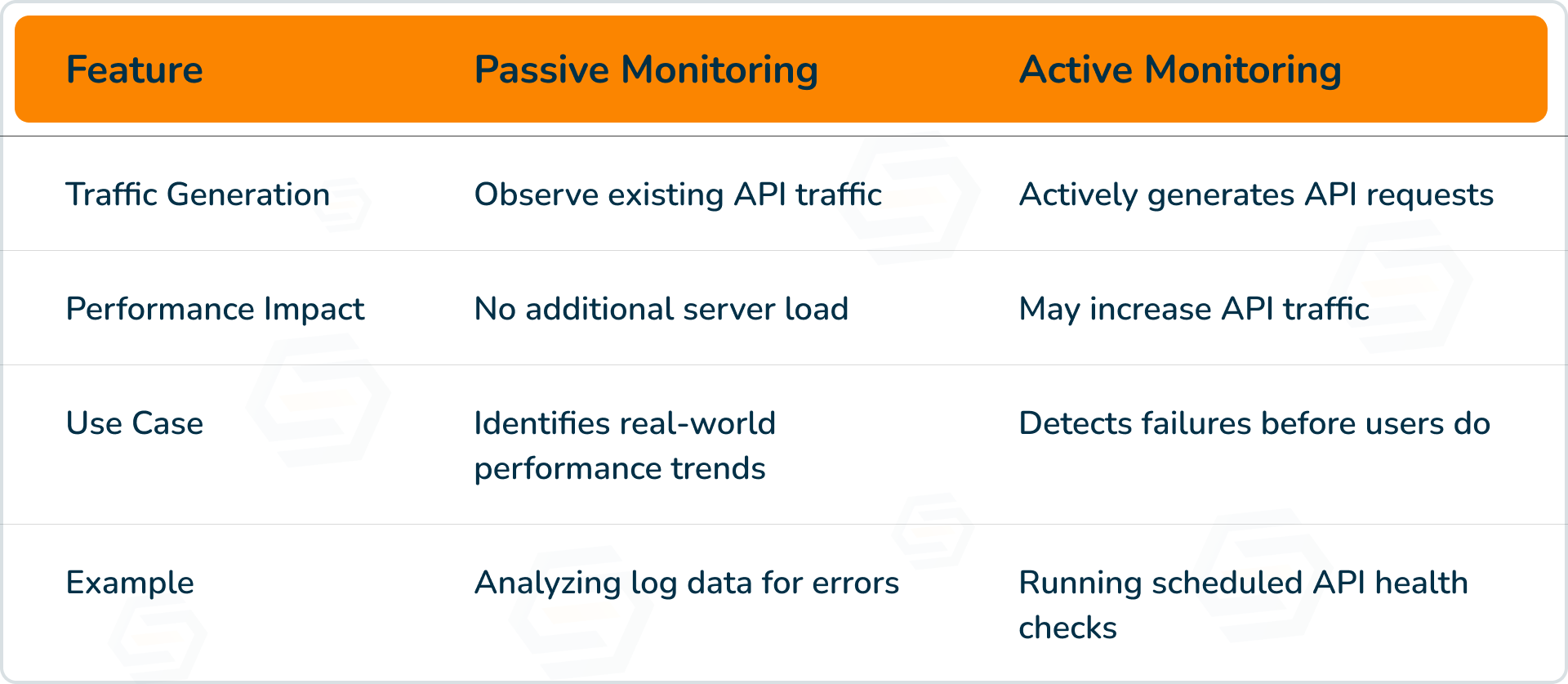

Passive API Monitoring

Key Features

- Analyzes live network traffic and API logs.

- Detects anomalies, slow response times, and error trends.

- Helps in troubleshooting issues based on actual user data.

Active API Monitoring

Key Features

- Uses automated scripts and testing tools to send requests and evaluate responses.

- Helps detect API failures, downtime, or performance degradation before users notice.

- Can send real-time alerts when performance falls below set thresholds.
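
Active monitoring as described above amounts to a loop that sends a synthetic request, times it, and raises an alert when the result is outside the limits. Here is a minimal sketch under stated assumptions: `probe`, `monitor`, the `check` and `alert` callables, and the 0.3-second latency limit are all hypothetical choices for illustration.

```python
import time


def probe(check, max_latency_s=0.3):
    """Run one synthetic check; return (status, latency_seconds).

    `check` is any zero-argument callable that performs the request
    and raises an exception on failure.
    """
    start = time.perf_counter()
    try:
        check()
    except Exception:
        return "down", time.perf_counter() - start
    latency = time.perf_counter() - start
    return ("slow" if latency > max_latency_s else "ok"), latency


def monitor(check, alert, rounds=3, interval_s=0.0, max_latency_s=0.3):
    """Probe repeatedly and call alert(status, latency) on every bad result."""
    for _ in range(rounds):
        status, latency = probe(check, max_latency_s)
        if status != "ok":
            alert(status, latency)
        time.sleep(interval_s)
```

In a real setup, `check` might wrap a call such as `urllib.request.urlopen(url, timeout=5)` against a health endpoint of your API, and `alert` would forward the event to whatever notification channel the team uses.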

Comparing Passive vs. Active API Monitoring

Common API Performance Bottlenecks & How to Fix Them

1. Slow Database Queries

Solution

- Add or improve indexes on the columns used by slow SQL queries.

- Use caching (e.g., Redis, Memcached) to keep frequently accessed data in memory.

- Employ asynchronous processing for background operations.
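
The caching advice above is often applied as a cache-aside pattern: look in the cache first, and only query the database on a miss. The sketch below uses a small in-process dictionary as a stand-in for Redis or Memcached so that it runs without external services. `TTLCache`, `get_or_load`, and the 60-second time-to-live are assumptions for illustration.

```python
import time


class TTLCache:
    """Tiny in-process cache standing in for Redis/Memcached in this sketch."""

    def __init__(self, ttl_s=60.0, clock=time.monotonic):
        self._ttl_s = ttl_s
        self._clock = clock      # injectable so expiry can be tested
        self._entries = {}       # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return the cached value; call loader(key) on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                      # cache hit
        value = loader(key)                      # e.g. the slow database query
        self._entries[key] = (now + self._ttl_s, value)
        return value
```

The time-to-live bounds how stale a cached value can become, so it should be chosen according to how quickly the underlying data changes.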

2. High API Latency Due to Network Issues

Solution

- Use Content Delivery Networks (CDNs) to reduce geographic latency.

- Implement connection pooling to optimize API requests.

- Reduce dependencies on slow third-party APIs by using local caching.

3. Excessive CPU & Memory Usage

Solution

- Optimize API logic by reducing redundant computations.

- Fetch only the necessary data using lazy loading.

- Implement rate limiting and throttling to prevent resource exhaustion.
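
Rate limiting can be implemented in several ways; the token bucket is one common approach and is used here only as an example, since the text does not name a specific algorithm. Tokens refill at a fixed rate up to a maximum, and each request consumes one. The class name `TokenBucket` and its parameters are assumptions for this sketch.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, then `rate_per_s` on average."""

    def __init__(self, rate_per_s, capacity, clock=time.monotonic):
        self._rate = rate_per_s
        self._capacity = capacity
        self._clock = clock              # injectable for testing
        self._tokens = float(capacity)   # start with a full bucket
        self._last = clock()

    def allow(self):
        """Return True if the request may proceed, False if it is throttled."""
        now = self._clock()
        # Refill according to the time elapsed since the last call.
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

A per-user limit would keep one bucket per user or API key, and a rejected request would typically be answered with HTTP status 429 (Too Many Requests).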

4. High Error Rates & Request Failures

Solution

- Introduce the Circuit Breaker pattern to handle failures gracefully.

- Ensure proper error handling that returns meaningful HTTP status codes.

- Monitor the API logs to identify and analyze recurring error patterns.
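
For readers unfamiliar with the Circuit Breaker pattern mentioned above, the following is a simplified sketch of the idea: after a number of consecutive failures, calls are rejected immediately for a cool-down period instead of hitting the failing dependency again. The names `CircuitBreaker` and `CircuitOpenError` and the default limits are assumptions; production libraries usually add more states and metrics.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self._max_failures = max_failures
        self._reset_after_s = reset_after_s
        self._clock = clock           # injectable for testing
        self._failures = 0
        self._opened_at = None        # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after_s:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: let one trial call through (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._opened_at is not None or self._failures >= self._max_failures:
                self._opened_at = self._clock()   # open (or re-open) the circuit
            raise
        # Success: close the circuit and reset the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Callers can catch `CircuitOpenError` and return a fallback response or a clear error status instead of waiting on a dependency that is known to be failing.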

5. Poor Scalability Under High Load

Solution

- Use auto-scaling (AWS Auto Scaling or Kubernetes).

- Deploy load balancers to distribute traffic among multiple servers.

- Optimize the API for horizontal scaling by designing it to be stateless.

Integrating API Performance Testing in CI/CD Pipelines

Why Automate API Performance Testing in CI/CD?

- Early Detection of Performance Issues – Identifies bottlenecks before deployment.

- Consistent Testing Across Builds – Ensures that performance remains stable with every update.

- Faster Release Cycles – Automates performance validation, reducing manual testing effort.

- Reduced Production Failures – Reduces API downtime caused by unoptimized code changes.

- Scalability and Efficiency – Load tests run automatically, helping verify that the APIs perform well under real-world traffic.

Steps to Integrate API Performance Testing in CI/CD Pipelines

Step 1: Choose a Performance Testing Tool

- Select a tool that easily integrates with your CI/CD pipeline.

- Best tools for automation: JMeter, K6, Gatling, LoadRunner.

Step 2: Configure Automated Test Scripts

- Define test scenarios (e.g., normal load, stress, and spike testing).

- Set performance benchmarks (e.g., API response must be under 300 ms).

Step 3: Integrate with CI/CD Platforms

- Use a CI/CD platform such as Jenkins, GitHub Actions, GitLab CI/CD, or Azure DevOps to run the tests as part of the workflow.

- Run the API performance tests automatically as part of pre-deployment validation.

Step 4: Run Tests on Every Code Commit

- Automatically run performance tests after every code change or pull request.

- Run API tests in a staging environment before deploying.

Step 5: Monitor and Analyze Test Results

- Send results to tools such as Grafana, Prometheus, or Datadog to track API performance over time.

- Configure alerts that are sent when API performance degrades or an error occurs.

Step 6: Optimize and Re-Test

- If performance issues are found, optimize the API before releasing the update.

- Run the tests again to confirm the improvements before deploying to production.

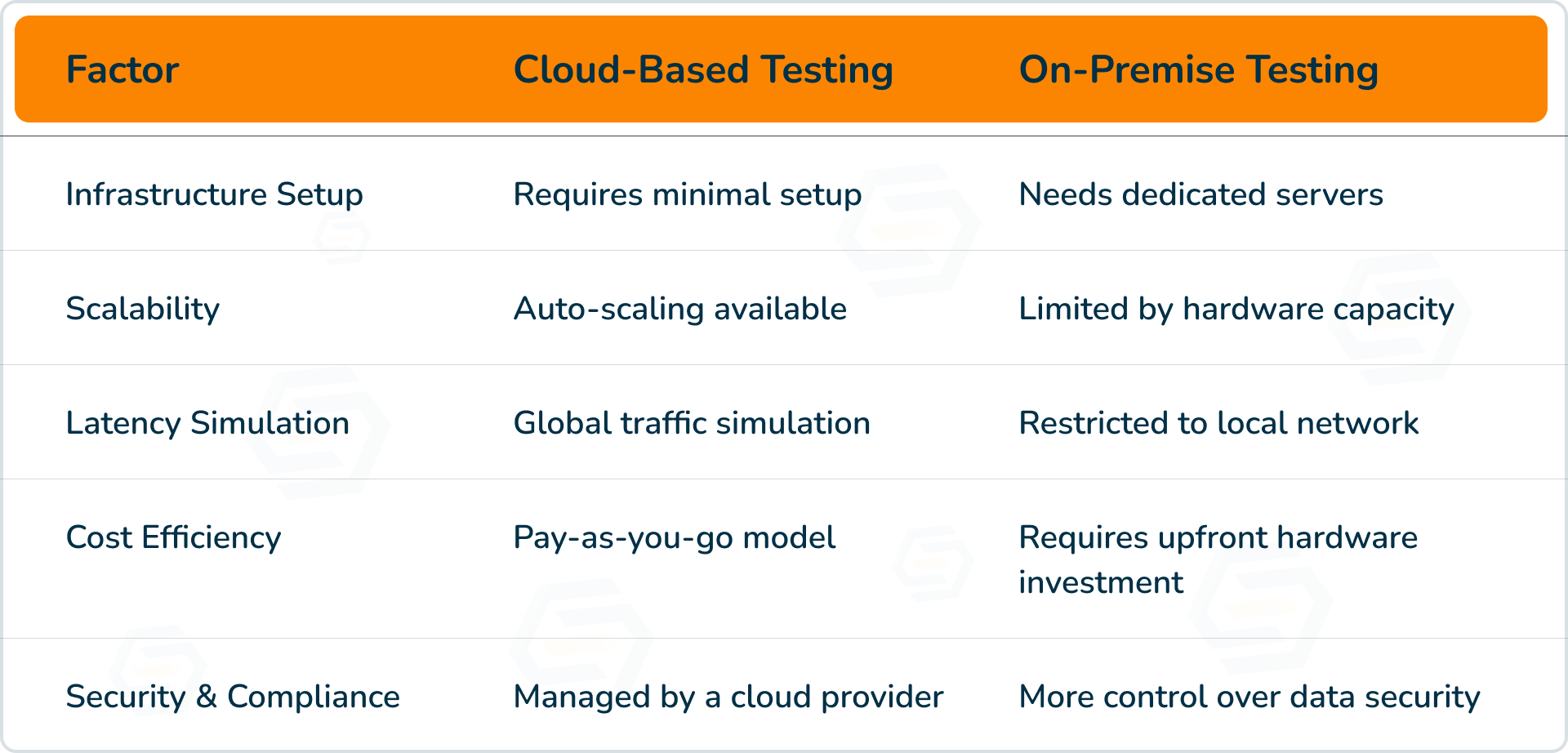

Cloud-Based vs. On-Premise API Performance Testing

Key Differences Between Cloud-Based and On-Premise API Performance Testing

Cloud-Based API Performance Testing

Advantages

- Scalability – Can handle millions of requests without infrastructure limitations.

- Global Traffic Simulation – API latency can be tested from various geographic locations.

- Lower Maintenance – There is little or no on-premise infrastructure to manage.

Challenges

- Dependency on Cloud Providers – Cloud service limitations may affect the performance of the application.

- Higher Long-Term Costs – Cloud usage costs can add up over time and increase budgets.

Best for

- APIs that offer low-latency access to users around the world.

- Applications using serverless architectures (AWS Lambda, Google Cloud Functions).

On-Premise API Performance Testing

Advantages

- More Control – Full control over data protection and infrastructure security.

- Predictable Costs – No need to pay for additional servers.

- Stable Testing Environment – The test network can be isolated from the internet, which avoids interference from external connectivity problems.

Challenges

- Limited Scalability – Large-scale tests require procuring extra servers.

- Higher Setup Complexity – Requires IT teams to set up and maintain the infrastructure.

Best for

- Companies that adhere to strict security policies (such as banks, medical institutions, and government agencies).

- APIs designed for internal applications where cloud scalability is not required.

Choosing the Right Approach

- Use cloud-based API testing if your application serves global users or is built on a cloud or serverless architecture.

- Use on-premise API testing if security and internal data privacy are of utmost importance.

- Hybrid Approach: Some companies run initial tests in the cloud and final security validation on-premise before deployment.

Ensure Reliable API Performance with the Test scenario

- Detect and eliminate bottlenecks before they impact users.

- Optimize API response times and resource utilization.

- Ensure seamless scalability for future growth.

- Automate performance validation within your CI/CD pipeline.

Keyur Kinkhabwala

QA Manager
